Category: Computer Tech

-

Big Tech Abuse – Meta & Google

Facebook has been at the forefront of social media user and privacy abuse for years. Being caught out in the Cambridge Analytica scandal was not an isolated event. Newly unsealed court documents from a private antitrust lawsuit detail abuses of trust that are cynical and unethical at the very minimum. The following screenshot of a…

-

AI and the truth

We are possibly at one of the greatest inflection points in human history. Strong words, but hear me out … In recent years, it’s become trendy to push one’s own truth in the absence of fact. This trend is known as wokeism, and has been and is being pushed in many social areas such as…

-

FortiGate SSL VPN security

Most firewall engineers using FortiGate will implement the SSL VPN function using the standard method as indicated in the documentation. Fortinet do provide additional information on securing SSL VPN but there’s even more you can do. I’ll go through a number of essential tasks to cover when implementing SSL VPN and some options to improve…

-

Google Chrome and privacy – opposing forces?

The Google Chrome browser was first released in Sep 2008 as an alternative to rival browsers, to “address perceived shortcomings in those browsers and to support complex web applications”. Google also wanted a browser that could better integrate with its own web services and technologies. That last statement speaks to the heart of…

-

Plex Discover: a lesson in privacy

It’s a common refrain: my data isn’t important so I don’t need to protect it, I’m unimportant so my information doesn’t matter … There have recently been some horror stories of overly ‘ambitious’ policing of internet-related activities. Take the father who sent pictures of his son with a developing issue to their doctor for…

-

PKI, processes and security

PKI, or Public Key Infrastructure, is the general term used for establishing and managing public key encryption, one of the most common forms of internet encryption. It is baked into every web browser (and many other applications) in use today to secure traffic across the public internet, but organizations can also deploy it…

-

SSL/TLS Certificate lifetime redux

I wrote an article in 2020 about SSL/TLS certificate lifetimes, the upshot of which was that the certificate/browser industry had just moved to a one-year maximum certificate lifetime (398 days, to be precise). I noted the following: There have been a number of attempts over the years to reduce the lifetime of certificates as they apply to…
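To make the 398-day figure concrete, here is a minimal sketch that checks whether a certificate's validity period exceeds that limit. It assumes the certificate details come as a dict shaped like the one Python's `ssl.SSLSocket.getpeercert()` returns, with `notBefore`/`notAfter` strings in OpenSSL's text format. `validity_days` and `exceeds_limit` are hypothetical helper names, and the simple subtraction ignores any edge-case counting rules a CA audit might apply.

```python
import ssl

# Maximum certificate lifetime cited in the article (398 days).
MAX_VALIDITY_DAYS = 398


def validity_days(not_before: str, not_after: str) -> float:
    """Return the length of a certificate's validity period in days.

    Both arguments use the text format found in getpeercert() output,
    e.g. "Sep  1 00:00:00 2020 GMT".
    """
    start = ssl.cert_time_to_seconds(not_before)  # seconds since the epoch
    end = ssl.cert_time_to_seconds(not_after)
    return (end - start) / 86400  # 86,400 seconds per day


def exceeds_limit(cert: dict, max_days: int = MAX_VALIDITY_DAYS) -> bool:
    """True if the certificate is valid for longer than max_days."""
    return validity_days(cert["notBefore"], cert["notAfter"]) > max_days


# Example: a certificate valid from 1 Sep 2020 to 4 Oct 2021 (exactly 398 days)
cert = {"notBefore": "Sep  1 00:00:00 2020 GMT", "notAfter": "Oct  4 00:00:00 2021 GMT"}
print(validity_days(cert["notBefore"], cert["notAfter"]), exceeds_limit(cert))
```

In practice the dict would come from a live TLS connection, but keeping the check separate from the network code makes it easy to run against certificates gathered from an inventory.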

-

IT Security for the Small Business

Structured IT security is generally seen as the domain of medium to large enterprises, as it can be expensive to implement properly and requires hard-to-find skills. However, there are many areas a small business can tackle to improve its security posture considerably without breaking the bank. I’ll simplify this process…

-

GPC / Global Privacy Control

It’s quite amazing to think that DNT, or Do Not Track, was first proposed back in 2009, 13 years ago. This was a first stab at the issue of website privacy and the horrendous marketing machine that is the internet. DNT was designed to allow users to opt out of website tracking,…
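Both DNT and GPC work the same basic way: the browser attaches a request header (`DNT: 1` for Do Not Track, `Sec-GPC: 1` for Global Privacy Control) and it is up to the website to honour it. The sketch below shows how a site might detect those signals from a plain header mapping. The function names and the "suppress tracking if either signal is present" policy are hypothetical illustrations, not a prescribed implementation.

```python
from typing import Mapping


def privacy_signals(headers: Mapping[str, str]) -> dict[str, bool]:
    """Report which browser opt-out signals are present in request headers."""
    # HTTP header names are case-insensitive, so normalise them first.
    normalised = {name.lower(): value.strip() for name, value in headers.items()}
    return {
        # Legacy Do Not Track: the browser sends "DNT: 1" when the user opts out.
        "dnt": normalised.get("dnt") == "1",
        # Global Privacy Control: the browser sends "Sec-GPC: 1".
        "gpc": normalised.get("sec-gpc") == "1",
    }


def should_suppress_tracking(headers: Mapping[str, str]) -> bool:
    """Hypothetical policy: treat either signal as an opt-out."""
    signals = privacy_signals(headers)
    return signals["dnt"] or signals["gpc"]


print(privacy_signals({"Sec-GPC": "1", "User-Agent": "example"}))
```

Nothing in either mechanism forces a site to comply; the header is only a request, which is precisely the weakness that left DNT largely ignored.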

-

Social Media security

Keeping yourself secure on the internet remains very important, given how ingrained internet access is in day-to-day activities. Think ride sharing, online banking, retail shopping, email and so on. Social media specifically remains a prime attack vector for malicious activity affecting many internet users’ security. Yet the…

-

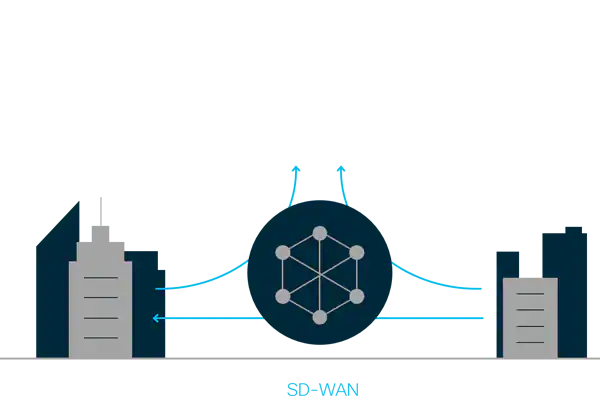

FortiGate SD-WAN

SD-WAN (software-defined WAN) is a topic that has been much discussed in the last couple of years, but it is also one of the least understood. One reason for this is that there are different implementations of SD-WAN, which leads to misunderstandings about how SD-WAN is used. So what is SD-WAN? It’s an overlay technology…

-

The little camera that could

IT and network security is a tough arena. Keeping networks, systems and data secure from what can only be called a total onslaught of malware and other malicious attacks is a difficult task. What makes the task even more difficult is the general indifference, especially among SMEs, to the potential harm that can be caused…

-

Storm in a WhatsApp teacup?

Facebook’s recent update of the Terms of Service for WhatsApp has got a lot of people riled up, and quite rightly so. The core of this issue is not privacy of information, as many believe, but rather pure business economics. Let’s cover the basics first. There are two primary considerations for using cloud services…

-

Mikrotik guest VLAN with Cap AC

It’s past time to create additional VLANs on my home network for IoT and guests, so I decided to take the plunge and see what configuration was required on my Mikrotik AP. The basic physical network topology is: internet <--> firewall <--> L2 switches <--> CapAC <--> users. As I’m not using an L3 switch…

-

Centos bails

Wow. What a week. I’m almost not sure where to start, but let’s give this a go. Red Hat’s had a pretty hard week convincing Centos users that their announcement on Tuesday (15th Dec) deprecating Centos 8 (and Centos downstream in general) is A Good Thing(tm). How did this come about? Centos is one of the most…